[Twin Design](http://www.shutterstock.com/gallery-809503p1.html?cr=00&pl=edit-00)/Shutterstock

Emotional contagion is when people subconsciously take on the emotions of those around them. It’s when happy people are around sad people and then feel rather down themselves. Or when sad people are in happy crowds and suddenly just want to dance. Like so many things in real life, this happens on the internet as well. Your emotional state converges with the general feeling of your Twitter feed or your Facebook friends. This is how humans work, it’s how we’re wired, and it’s nothing to lose sleep over.

What may in fact be worth losing sleep over is that Facebook just admitted to intentionally manipulating people’s emotions by selectively choosing which type of their friends’ posts—positive or negative—appeared in their News Feed.

Take it away, The Next Web:

> The company has revealed in a research paper that it carried out a week-long experiment that affected nearly 700,000 users to test the effects of transferring emotion online.
>
> The News Feeds belonging to 689,003 users of the English language version were altered to see “whether exposure to emotions led people to change their own posting behaviors,” Facebook says. There was one track for those receiving more positive posts, and another for those who were exposed to more emotionally negative content from their friends. Posts themselves were not affected and could still be viewed from friends’ profiles, the trial instead edited what the guinea pig users saw in their News Feed, which itself is governed by a selective algorithm, as brands frustrated by the system can attest to.
>
> Facebook found that the emotion in posts is contagious. Those who saw positive content were, on average, more positive and less negative with their Facebook activity in the days that followed. The reverse was true for those who were tested with more negative postings in their News Feed.

OK, let’s break some stuff down:

Can they do this?

Yes. You agree to let the company use its information about you for “data analysis, testing, research and service improvement” when you agree, without reading them, to the terms of service. It’s the “research” bit that’s relevant.

Should they?

I don’t know! There are clearly some ethical questions about it. A lot of people are pretty outraged. Even the editor of the study thought it was a bit creepy.

Should I quit Facebook?

You’re not going to quit Facebook.

No, really. I might.

You’re not going to quit Facebook.

You don’t even know me. I really might quit. No joke. I have my finger on the button. I saw an ad for a little house out in the country. No internet. No cell service. I could sell everything and go there and live a quiet, deliberate life by a pond. I could be happy there in that stillness.

Cool, so, I personally am not going to quit Facebook. That seems to me to be an overreaction. But I do not presume to know you well enough to advise you on this matter.

(You’re not going to quit Facebook.)

Anything else?

Yes, actually!

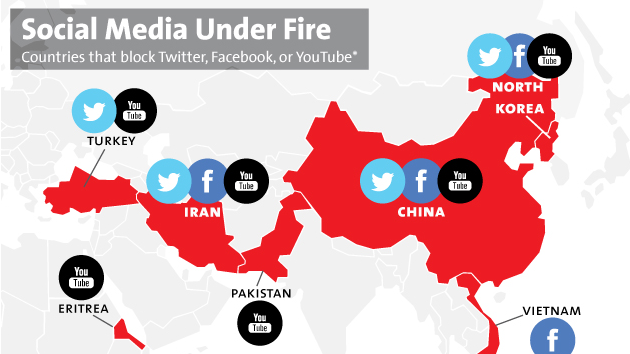

Earlier this year there was a minor brouhaha over the news that USAID had introduced a fake Twitter into Cuba in an attempt to foment democracy. It didn’t work, and they pulled the plug. Let’s dress up and play a game of pretend: If Facebook has the power to make people arbitrarily happy or sad, it could be quite the political force in countries where it has a high penetration rate. (Cuba isn’t actually one of those countries. According to Freedom House, only 5% of the population has access to the World Wide Web.)

Economic confidence is one of the biggest factors people consider when they go to vote. What if, for the week before the election, your News Feed filled up with posts from your unemployed friends looking for work? Not that Mark Zuckerberg and co. would ever do that, but they could!

Have fun, conspiracy theorists!