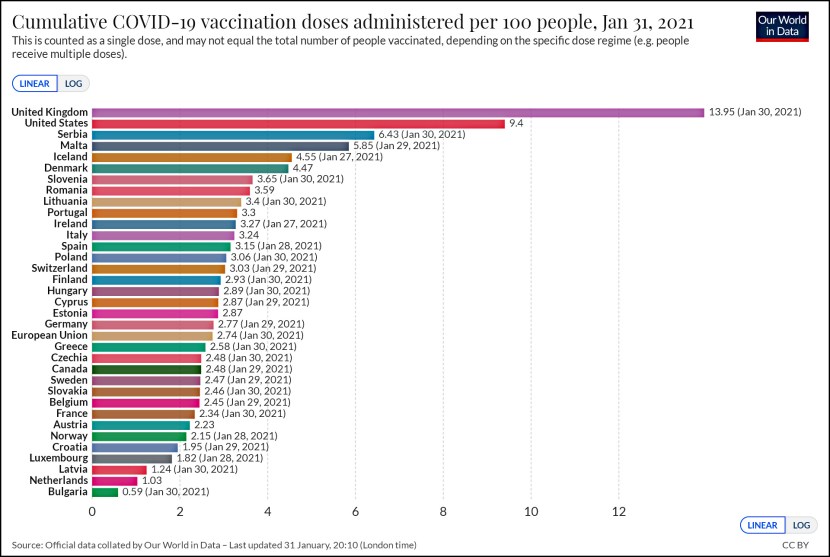

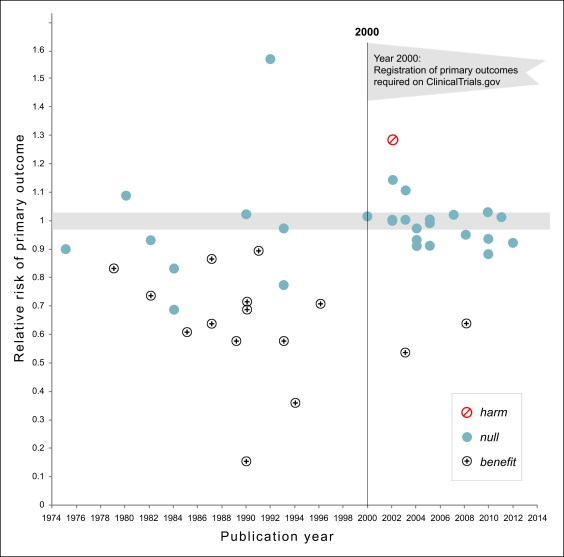

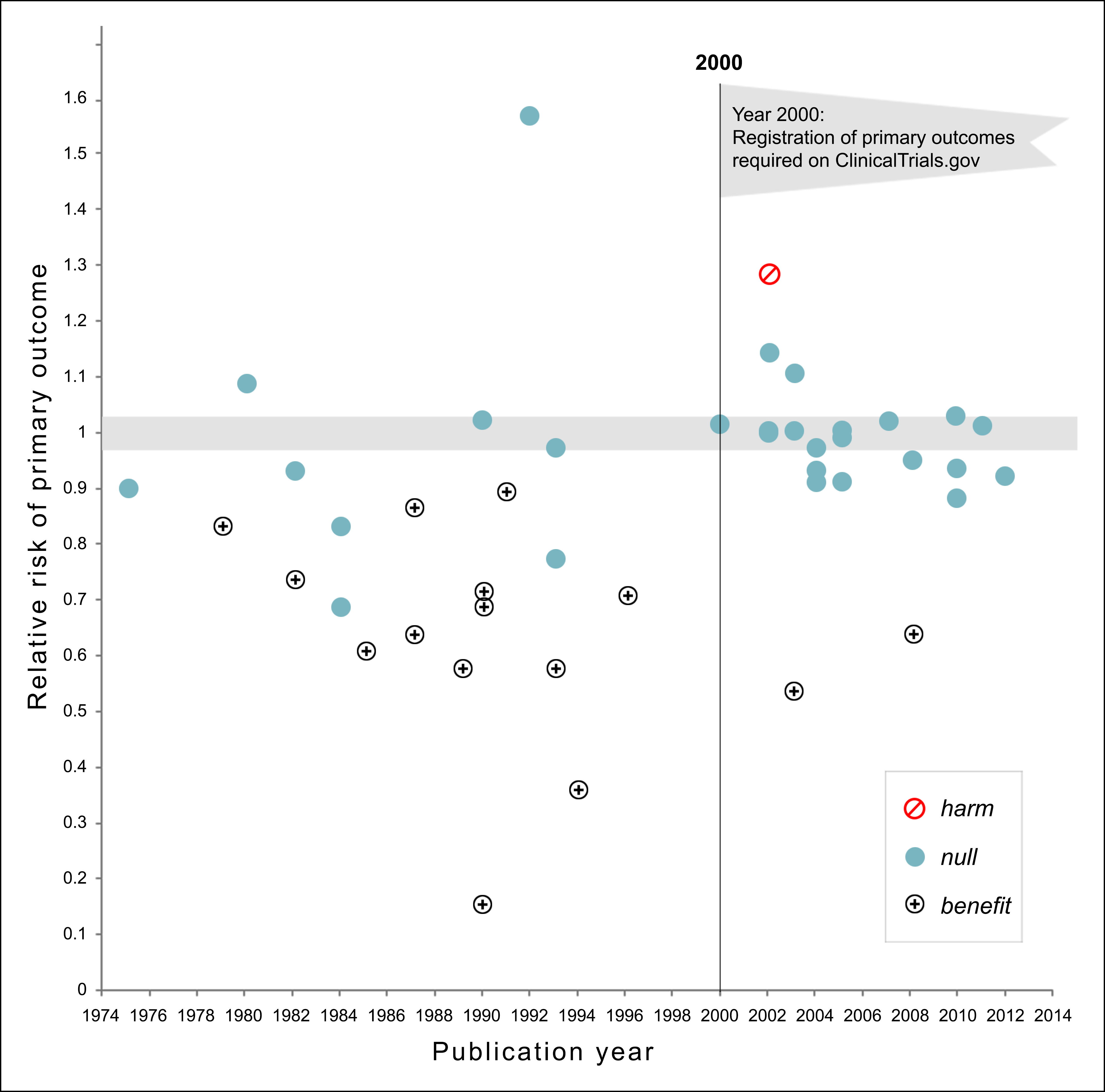

This chart is three years old, but it may be one of the greatest charts ever produced. Seriously. Via John Holbein, here it is:

Let me explain. The authors collected every significant clinical study of drugs and dietary supplements for the treatment or prevention of cardiovascular disease between 1974 and 2012. Then they displayed them on a scatterplot.

Prior to 2000, researchers could do just about anything they wanted. All they had to do was run the study, collect the data, and then look to see if they could pull something positive out of it. And they did! Out of 22 studies, 13 showed significant benefits. That’s 59 percent of all studies. Pretty good!

Then, in 2000, the rules changed. Researchers were required to say, before the study started, what they were looking for. They couldn’t just mine the data afterward looking for anything that happened to be positive. They had to report the results they said they were going to report.

And guess what? Out of 21 studies, only two showed significant benefits. That’s 10 percent of all studies. Ugh. And one of the studies even demonstrated harm, something that had never happened before 2000.
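If you want to check the arithmetic, here is a quick sketch in Python. The counts are the ones quoted above; the `share` helper is just an illustrative name, not anything from the study.

```python
# Counts as quoted in the post: (trials showing significant benefit, total trials)
before_2000 = (13, 22)
after_2000 = (2, 21)


def share(positive, total):
    """Percentage of trials reporting a significant benefit, rounded to a whole number."""
    return round(100 * positive / total)


print(share(*before_2000))  # 59
print(share(*after_2000))   # 10
```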

Reports for all-cause mortality were similar. Before 2000, 5 out of 24 trials showed reductions in mortality. After 2000, not a single study showed a reduction in mortality. Here’s the adorable way the authors summarize their results:

> The number of NHLBI trials reporting positive results declined after the year 2000. Prospective declaration of outcomes in RCTs, and the adoption of transparent reporting standards, as required by clinicaltrials.gov, may have contributed to the trend toward null findings.

Let me put this into plain English:

Before 2000, researchers cheated outrageously. They tortured their data relentlessly until they found something—anything—that could be spun as a positive result, even if it had nothing to do with what they were looking for in the first place. After that behavior was banned, they stopped finding positive results. Once they had to explain beforehand what primary outcome they were looking for, practically every study came up null. The drugs turned out to be useless.
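To see why hunting through the data after the fact is so effective, here is a rough back-of-the-envelope sketch. It rests on assumptions that are mine, not the study's: the drug does nothing at all, each outcome is tested independently, and "significant" means the usual 5 percent threshold. Real trial outcomes are correlated, so treat the numbers as illustrative only.

```python
def chance_of_false_positive(outcomes, alpha=0.05):
    """Probability that at least one of `outcomes` independent tests of a
    truly useless drug comes up 'significant' at level `alpha`.

    Assumes independent tests; both parameters are hypothetical choices.
    """
    return 1 - (1 - alpha) ** outcomes


# One pre-declared outcome versus a handful or a pile of outcomes to pick from.
for k in (1, 5, 20):
    print(k, round(chance_of_false_positive(k), 2))
# 1 0.05
# 5 0.23
# 20 0.64
```

Under those assumptions, a researcher who declares one outcome in advance gets a spurious "win" about 5 percent of the time, while one who is free to choose among 20 outcomes afterward gets one nearly two times out of three.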

Is this because scientists are under pressure from pharmaceutical companies to show positive results, and before 2000 they did exactly that? Or is it because scientists just like reporting positive results if they can? After all, who wants to spend years of their life on a bit of research that ends up being a nothingburger? I guess we’ll never know. But one thing we do know: we need to keep as sharp an eye on scientists as we do on anyone else, especially if there’s a lot of money at stake. When we don’t, they’re just as vulnerable to pressure and hopeful thinking as anyone.