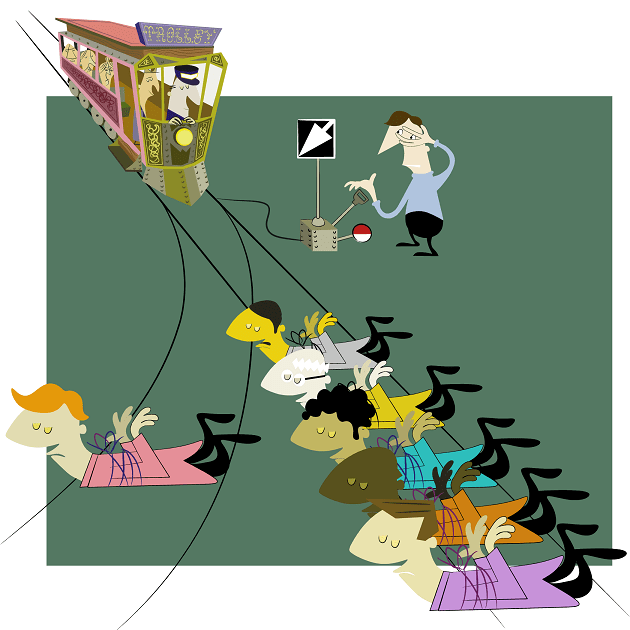

The trolley dilemma [John Holbo](http://www.flickr.com/photos/jholbo/5022382134/)/Flickr

Maybe you already know the famous hypothetical dilemma: A train is barreling down a track, about to hit five people, who are certain to die if nothing happens. You are standing at a fork in the track and can throw a switch to divert the train to another track—but if you do so, one person, tied to that other track, will die. So what would you do? And moreover, what do you think your fellow citizens would do?

The first question is a purely ethical one; the second, however, can be investigated scientifically. And in the past decade, a group of researchers have been pursuing precisely that sort of investigation. They’ve put our sense of right and wrong in the lab, and even in the fMRI machine. And their findings have begun to dramatically illuminate how we make moral and political decisions and, perhaps, will even reshape our understanding of what morality is in the first place.

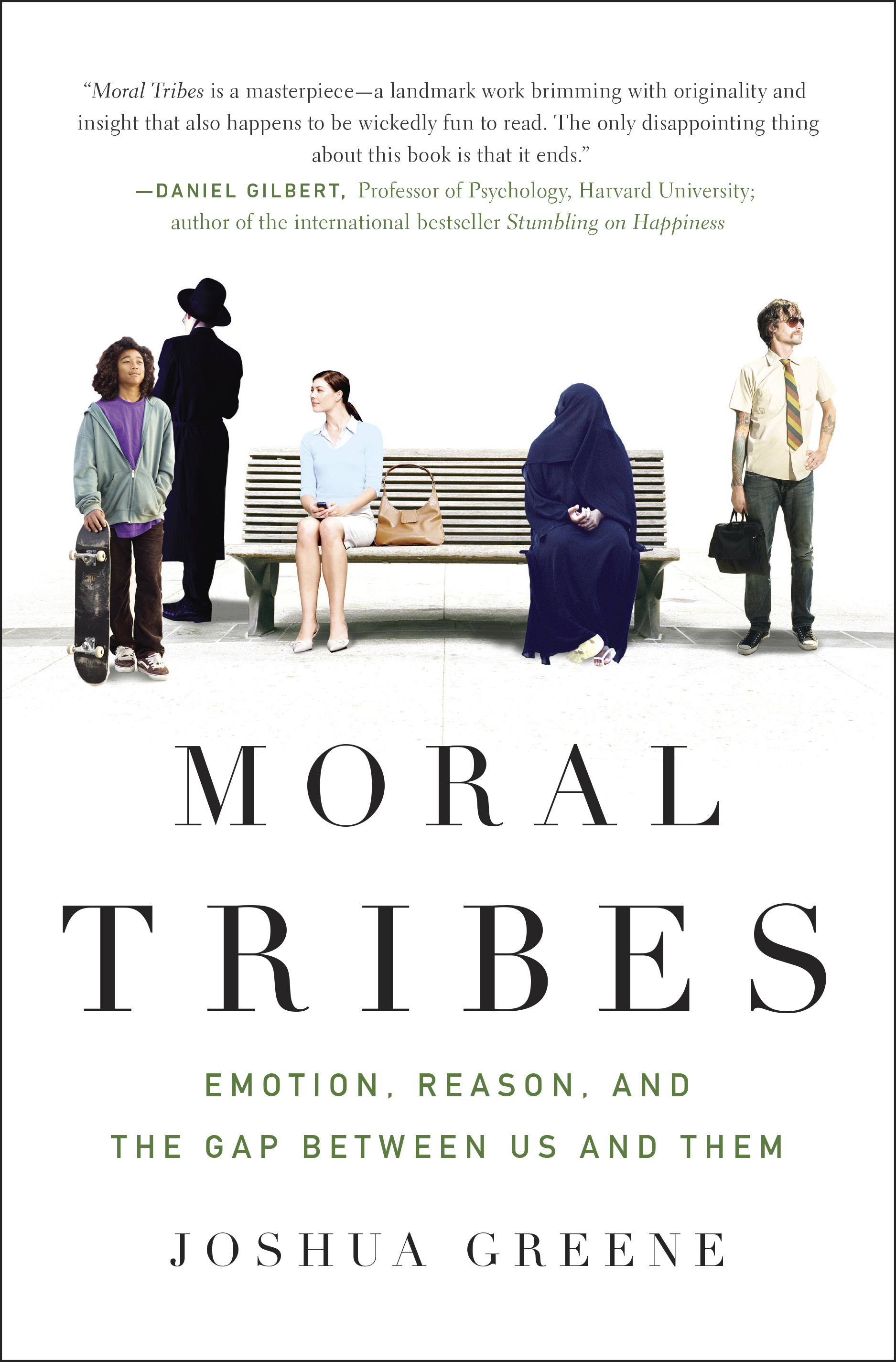

“The core of morality is a suite of psychological capacities that enable us to get along in groups,” explains Harvard’s Joshua Greene, a leader in this research and author of the new book Moral Tribes: Emotion, Reason, and the Gap Between Us and Them, on the latest episode of the Inquiring Minds podcast (listen above). The word “group” here is essential: According to Greene, while we have innate dispositions to care for one another, they’re ultimately limited and work best among smallish clans of people who trust and know each other.

The morality that the globalizing world of today requires, Greene argues, is thus quite different from the morality that comes naturally to us. To see how he reaches this conclusion, let’s go through some surprising facts from Greene’s research and from the science of morality generally:

1. Evolution gave us morality—as a default setting. One central finding of modern morality research is that humans, like other social animals, naturally feel emotions, such as empathy and gratitude, that are crucial to group functioning. These feelings make it easy for us to be good; indeed, they’re so basic that, according to Greene’s research, cooperation seems to come naturally and automatically.

Greene and his colleagues have shown as much through experiments in which people play something called the “Public Goods Game.” A group of participants are each given equal amounts of tokens or money (say $5 each). They are then invited to place some of their money into a shared pool, whose amount is increased each round (let’s say doubled) and then redistributed evenly among players. So if there are four players and everybody is fully cooperative, $20 goes into the pool and $40 comes out, and everybody doubles their money, taking away $10. However, participants can also hold on to their money and act as a “free rider,” taking earnings out of the group pot even though they put nothing in.
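To make the arithmetic concrete, here is a minimal sketch of a single round, using the numbers above ($5 each, a doubled pool, four players). The function name `public_goods_round` and its parameters are illustrative assumptions, not taken from Greene's experiments.

```python
def public_goods_round(contributions, endowment=5.0, multiplier=2.0):
    """Return each player's money at the end of one round.

    contributions: how much each player puts into the shared pool.
    endowment and multiplier are illustrative defaults ($5, doubled pool).
    """
    if any(c < 0 or c > endowment for c in contributions):
        raise ValueError("each contribution must be between 0 and the endowment")
    # The pool is multiplied, then split evenly among all players,
    # including those who contributed nothing.
    share = sum(contributions) * multiplier / len(contributions)
    # Each player keeps whatever they did not contribute, plus their share.
    return [endowment - c + share for c in contributions]


everyone_cooperates = public_goods_round([5, 5, 5, 5])
one_free_rider = public_goods_round([5, 5, 5, 0])
print(everyone_cooperates)  # [10.0, 10.0, 10.0, 10.0]
print(one_free_rider)       # [7.5, 7.5, 7.5, 12.5]
```

In the second case the free rider walks away with $12.50 while each cooperator gets $7.50, which is why free riding is tempting and why it hurts the group.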

In one Public Goods Game experiment, Greene and his colleagues decided to speed the process up. They made people play the game faster and decide what to do more quickly. And the result was more “moral” behavior and less free-riding, suggesting that cooperation is a default. “We have gut reactions that make us cooperative,” Greene says. Indeed, he adds, “If you force people to stop and think, then they’re less likely to be cooperative.”

2. Gossip is our moral scorecard. In the Public Goods Game, free riders don’t just make more money than cooperators. They can tank the whole game, because everybody becomes less cooperative as they watch free riders profit at their expense. In some game versions, however, a technique called “pro-social punishment” is allowed. You can pay a small amount of your own money to make sure that a free rider loses money for not cooperating. When this happens, cooperation picks up again—because now it is being enforced.
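A rough sketch of how punishment changes the incentives, assuming a four-player game with $5 each and a doubled pool. The punishment cost ($1 to the punisher) and fine ($3 to the target) are hypothetical values chosen for illustration; the article does not give the actual amounts used in the experiments.

```python
def payoffs_with_punishment(contributions, punishments,
                            endowment=5.0, multiplier=2.0,
                            cost=1.0, fine=3.0):
    """Payoffs for one round, followed by a punishment stage.

    punishments: list of (punisher_index, target_index) pairs.
    cost and fine are hypothetical: the punisher pays `cost`,
    the target loses `fine`.
    """
    share = sum(contributions) * multiplier / len(contributions)
    payoffs = [endowment - c + share for c in contributions]
    for punisher, target in punishments:
        payoffs[punisher] -= cost   # punishing is costly to the punisher
        payoffs[target] -= fine     # and more costly to the free rider
    return payoffs


# Player 3 free-rides; the three cooperators each punish player 3.
result = payoffs_with_punishment([5, 5, 5, 0], [(0, 3), (1, 3), (2, 3)])
print(result)  # [6.5, 6.5, 6.5, 3.5]
```

Under these assumed numbers the free rider ends up with less than the cooperators, so holding back no longer pays.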

Real life isn’t a Public Goods Game, but it is in many ways analogous. We also keep tabs and enforce norms through punishment; in Moral Tribes, Greene suggests that a primary way that we do so is through gossip. He cites the anthropologist Robin Dunbar, who found that two-thirds of human conversations involve chattering about other people, including spreading word of who’s behaving well and who’s behaving badly. Thus do we impose serious costs on those who commit anti-social behavior.

3. We’re built to solve the problem of “me versus us.” We don’t know how to deal with “us versus them.” Cooperation, enforcing beneficial social norms: These are some of the relatively positive aspects of our basic morality. But the research also shows something much less rosy. For just as we’re naturally inclined to be cooperative within our own group, we’re also inclined to distrust other groups (or worse). “In-group favoritism and ethnocentrism are human universals,” writes Greene.

What that means is that once you leave the setting of a small group and start dealing with multiple groups, there’s a reversal of field in morality. Suddenly, you can’t trust your emotions or gut settings any longer. “When it comes to us versus them, with different groups that have different feelings about things like gay marriage, or Obamacare, or Israelis versus Palestinians, our gut reactions are the source of the problem,” says Greene. From an evolutionary perspective, morality is built to make groups cohere, not to achieve world peace.

4. Morality varies regionally and culturally. This is further exacerbated by the fact that in different cultures and in different groups, there is subtle (and sometimes, not-so-subtle) variation in moral norms, making outside groups seem tougher to understand and sympathize with.

Take, again, the Public Goods Game. People play it differently around the world, Greene reports. The most cooperative people (that have been studied, at least) live in Boston and Copenhagen; they make high contributions in the game almost from the start, and stay that way. By contrast, in Athens and Riyadh, it’s the opposite pattern: People contribute low and this doesn’t change (in this version of the game, free riders are allowed to punish cooperators too, and this seems to account for the outcome). There are also a variety of cities in between, like Seoul and Melbourne, where contributions start out moderate or low and trend higher as free riders are punished and norms get enforced. “There are very different expectations in different places about what the terms of cooperation are,” Greene says. “About what people, especially strangers, owe each other.” (It is important to note that Greene does not attribute these regional differences to evolution or genetics; they’re cultural.)

5. Your brain is not in favor of the greatest good for the greatest number. In addition to the Public Goods Game, Greene and colleagues also experiment with a scenario called the “trolley dilemma,” described above. So what do people do when asked whether it is moral to take one life in order to save five?

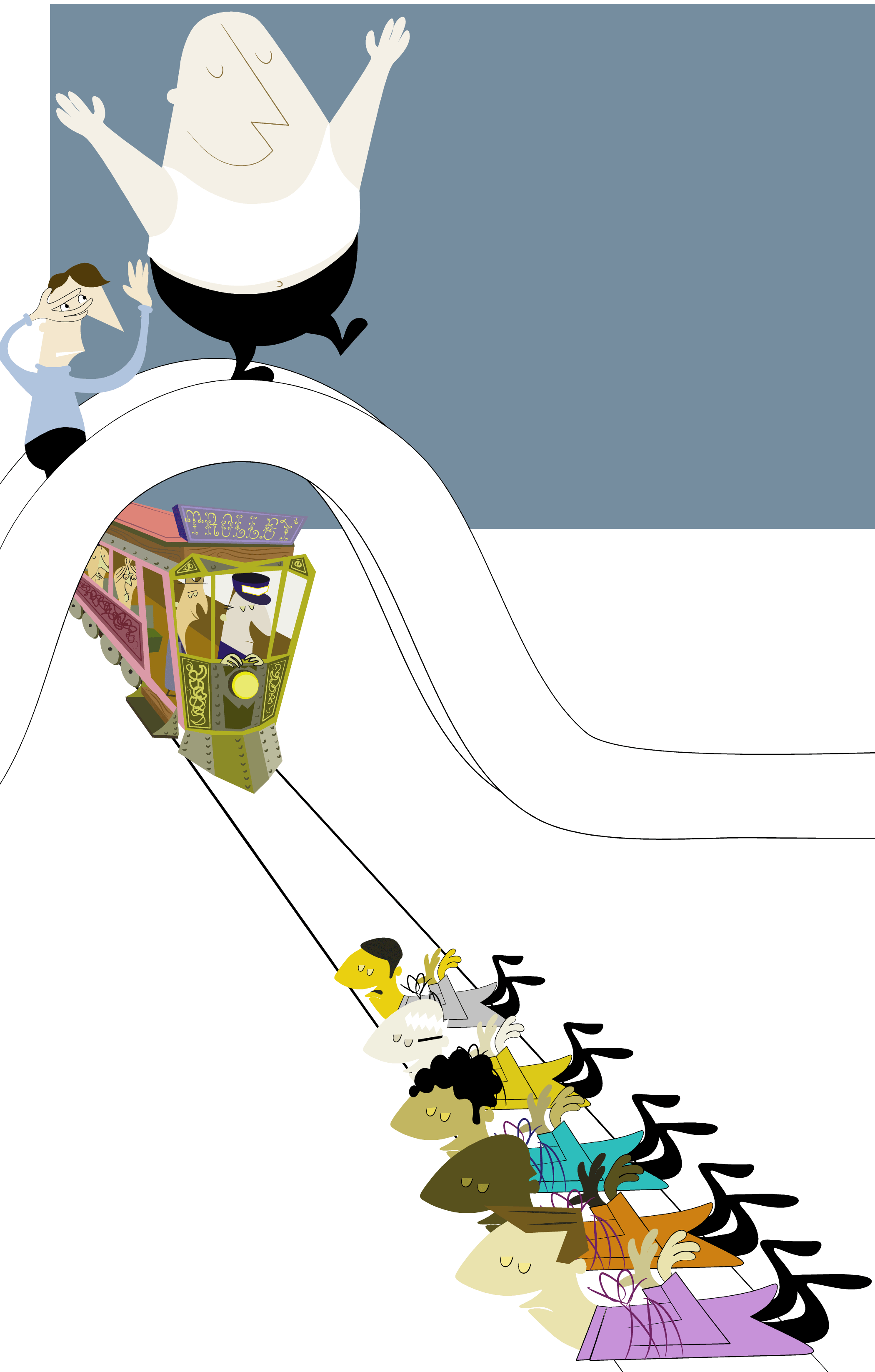

The footbridge dilemma John Holbo/Flickr

The answer is that for the most part, people placed in this hypothetical dilemma choose utilitarianism, or the greatest good for the greatest number. They say they want to divert the train, sacrificing one person to save five.

However, things become very different when instead of the standard trolley dilemma, researchers substitute a variant called the “footbridge dilemma,” illustrated above. Now, the trolley is barreling down the track again, but you’re in a different position: atop a bridge over the tracks, alongside a large man. The only way to stop the train is to push him off the bridge, onto the tracks in front of it. The man is big enough to stop the train, and so once again, you’ll save five lives by sacrificing one. The math is exactly the same, but people respond very differently: mostly, they just won’t push the man or say it is okay to do so. “So the question is,” Greene says, “why do we say it’s okay to trade one life to save five here, but not there?”

Greene and fellow researchers looked to neuroscience to find out. It turns out that the two dilemmas activate different parts of the brain, and “there’s an emotional response that makes most people say no to pushing the guy off the footbridge, that competes with the utilitarian rationale,” Greene says. However, this is not true in certain patients with damage to certain regions of the brain. People with damage to the ventromedial prefrontal cortex, a part of the brain that processes emotions, are more likely to treat the two dilemmas in the same way, and make more utilitarian decisions. Meanwhile, when people do make utilitarian judgments in the trolley dilemma, Greene’s research suggests that their brains shift out of an emotional and automatic mode and into a more deliberative mode, activating different brain regions associated with conscious control.

6. Humanity may, objectively, be becoming more moral. Based on many experiments with Public Goods Games, trolleys, and other scenarios, Greene has come to the conclusion that we can only trust gut-level morality to do so much. Uncomfortable scenarios like the footbridge dilemma notwithstanding, he believes that something like utilitarianism, which he defines as “maximize happiness impartially,” is the only moral approach that can work with a vast, complex world composed of many different groups of people.

But to get there, Greene says, requires the moral version of a gut override on the part of humanity—a shift to “manual mode,” as he puts it.

The surprise, then, is that he’s actually pretty optimistic. It is far easier now than it ever was, Greene says, to be aware that your moral obligations don’t end where your small group ends. We all saw this very recently, with the global response to the devastation caused by Super Typhoon Haiyan in the Philippines. We’re just more conscious, in general, of what is happening to people very distant from us. What’s more, intergroup violence seems to be on the decline. Here Greene cites the recent work of his Harvard colleague Steven Pinker, who has documented a long-term decline of violence across the world in modern times.

To be more moral, then, Greene believes that we must first grasp the limits of the moral instincts that come naturally to us. That’s hard to do, but he thinks it gets collectively easier.

“There’s a bigger us that’s growing,” Greene says. “Wherever you go, there are tribal forces that oppose that larger us. But, the larger us is growing.”

This episode of Inquiring Minds, a podcast hosted by best-selling author Chris Mooney and neuroscientist and musician Indre Viskontas, also features a discussion of surprising new research on the causes of autism, and on the genetic origins of our political and religious beliefs.

To catch future shows right when they are released, subscribe to Inquiring Minds via iTunes or RSS. You can also follow the show on Twitter at @inquiringshow and like us on Facebook.