This essay is the cover story for our March/April issue. Portions of it appeared in earlier pieces.

A country riven by ethnic tension. Spontaneous protests driven by viral memes. Violence and riots fueled by hateful fake-news posts, often about “terrorism” by marginalized groups.

It’s a story we’ve seen play out around the world recently, from France and Germany to Burma, Sri Lanka, and Nigeria. The particulars are different—gas prices were the trigger in France, lies about machete attacks in Nigeria—but one element has been present every time: Facebook. In each of these countries, the platform’s power to accelerate hate and disinformation has translated into real-world violence.

Americans used to watch this kind of stuff with the comforting conviction that It Can’t Happen Here. But we’ve learned that the United States is not as exceptional as we might have thought, nor are contemporary societies as protected from civil conflict as we had hoped.

Just picture a reasonably proximate scenario: It’s the winter of 2020, and Donald Trump—having lost reelection by a margin closer than expected—is in full attack mode, whipping up stories of runaway voter fraud. Local protest groups coalesce around Facebook posts assailing liberals, murderous “illegals,” feminists. (This is basically what happened in France last year with the “anger groups” that birthed the yellow vest protests.) Pizzagate-style conspiracy theories race through these groups, inflaming their more extreme members. Add a population that is, unlike those of France and Nigeria, armed to the teeth, and the picture gets pretty dark.

In other words, though it has already facilitated the election of a demagogue committed to stoking racial prejudice, enriched his family, and sold out America’s national interest, social media may not yet have shown us the worst it can do to a divided society. And if we don’t get a handle on the power of the platforms, we could see worse play out sooner than we think.

This prognosis may sound grim, but it’s not intended to get you stockpiling canned goods or researching New Zealand immigration law. It’s simply to ratchet up the urgency with which we think about this problem, to bring to it the kind of focus we brought to other times when a single corporation—Standard Oil, AT&T, Microsoft—amassed an unacceptable degree of power over the fate of our society.

In the case of social platforms, their power is over the currency of democracy: information. Nearly 70 percent of American adults say they get some of their news via social media. That’s a huge shift not just in terms of distribution, but in terms of quality control, too. In the past, virtually all the institutions distributing news had verification standards of some kind, no matter how thin or compromised, before publication. Facebook has none. Right now, we could concoct almost any random “news” item and, for as little as $3 a day to “boost” it via the platform’s advertising engine, get it seen by up to 3,400 people each day as if it were just naturally showing up in their feed.

This is no hypothetical. It’s precisely what Vladimir Putin’s minions, and the Trump campaign and its allies, did in 2016. And why not? Facebook showed them the way, dispatching staffers to campaigns to make sure they knew how to get exactly the messages they wanted in front of exactly the people most susceptible.

Facebook—and all of us—got a wake-up call when we learned how bad actors put those types of lessons into practice. But make no mistake: While Facebook and YouTube, Twitter, and the other platforms may have been genuinely shocked by what happened in 2016, disinformation and manipulation are not a bug in their businesses. They are the very core of the model, which is why the platforms will never fix the problem on their own.

Recall for a moment the commercial, ubiquitous across television last year: “We came here for the friends.” The narrator, sounding a little like a younger Mark Zuckerberg, skipped us through pictures of kids in braces, awkward bands, birthdays. Facebook was telling us its creation myth, and it almost felt true. Wasn’t it that way when we first made our accounts? Didn’t we marvel at the parade of connections that suddenly poked into our lives, reminding us of who we’d been, who we might become?

“But then something happened,” the commercial’s narrator intoned, and the screen filled up with the words “CLICKBAIT” and “FAKE NEWS.” Until finally, young Zuck put a stop to it: “That’s going to change…Because when this place does what it was built for, then we all get a little closer.”

It was a classic crisis communications campaign. Facebook was desperate to reset its brand, tarnished by Cambridge Analytica and Russian trolls. It was fashioning an alternative history of itself, one that it would relentlessly promote—via advertising and congressional testimony and carefully stage-managed interviews—throughout 2018. But very little about the actual record supports that story.


Instead, what we know now is that, for years, Facebook has been aware that user data was being shared with outside actors and that its platform was being turned into a disinformation machine. Over and over, it had the option to address the problem and inform the public. And over and over, it chose to go the other way. Think back.

- Facebook was fully aware, back in 2015, that Cambridge Analytica and other companies had gained access to detailed, personal information about many millions of users. Facebook kept that knowledge to itself and even threatened to sue the Guardian when that paper and the New York Times finally broke the story more than two years later.

- Facebook’s security chief investigated, as early as the summer of 2016, how the platform was being manipulated by Putin’s minions. Yet as late as April 2017, Facebook still downplayed and scrubbed his analysis of what the Russians had done.

- Two days after the 2016 election, Zuckerberg protested that it was “crazy” to think disinformation on his platform could have made a difference; “only a profound lack of empathy” with the electorate could lead one to think that way, he said. The admonition rings even more patronizing now that we know that his own employees had been concerned for months that Facebook had become the front line of information warfare.

- Russian influence operations put special emphasis on African American communities, using both Facebook and (Facebook-owned) Instagram with accounts such as @blackstagram_ to discourage people from voting. And the Russians didn’t slow down after the election: They simply switched gears and began deriding the Robert Mueller investigation.

- Meanwhile in Nigeria, Facebook’s push to make sure its app was the gateway to the internet for millions of new smartphone users set off a tide of fake news and hate speech that has led to multiple murders. “In a multiethnic and multireligious country like ours,” Nigeria’s minister of communications said in June 2018, “fake news is a time bomb.” (Sound familiar?) In response, Facebook launched a digital literacy program that partners with 140 secondary schools—less than 1 percent of Nigeria’s schools.

- Likewise, Sri Lanka begged Facebook to help rein in anti-Muslim propaganda, with little response, until violent mobs ransacked Muslim homes and businesses and the government shut down access to Facebook entirely—whereupon the company finally reacted.

- Last fall, Facebook chose the day before Thanksgiving to admit what it had thunderously denied for a week: that it had retained a conservative oppo firm (that maintains its very own fake-news site) to dig up dirt on its critics and link them to George Soros, the favorite target of anti-Semitic haters.

- As late as the summer of 2018, Facebook was letting Yahoo access your friends’ posts without telling you. It allowed Spotify and Netflix to read your messages without your consent and gave Apple access to contact and calendar information even when you had specifically disabled data sharing. It was doing all this despite having been embarrassed, dragged before Congress, and excoriated by users for exactly this kind of breach of trust.

The list goes on—from Brexit to Black Lives Matter, we keep learning of episodes where social media was used to spread disinformation and hate. The transformation of Facebook into a tool for manipulation was not something that, as the commercial claims, just happened. It was facilitated and concealed at every step by Facebook itself. And the actions of Facebook's leaders make it difficult, even for those formerly inclined to give Zuckerberg and Sheryl Sandberg the benefit of the doubt, to continue doing so.


But Facebook has not just given aid and comfort to propagandists. It has hurt the antidote to fake news—real news. Review, briefly, the recent history of our industry. First, starting in the 2000s, came the giant migration of advertising dollars from publishers to Facebook and Google. Today, the two control an estimated 58 percent of the US digital ad market, with Amazon, Microsoft, and the like dividing up the rest and publishers representing barely a rounding error.

In large part as a result, there are now roughly 24,000 journalists working in America’s daily print newsrooms, down from some 56,000 in the early 2000s. And more and more of them work for hedge-fund owners who milk what remains of newspapers’ profits—mostly through layoffs—while further degrading coverage. Here in the Bay Area, all the daily papers except the San Francisco Chronicle are owned by one of these hedge funds, Alden Global Capital. There were once more than 1,000 journalists working for these papers, including 440 at the San Jose-based Mercury News, then one of the nation’s strongest regional outlets. Today there are 145 left across more than two dozen publications, covering a region of 7.6 million people.

It came, then, as an improbable bit of good news when, on November 6, 2014, Zuckerberg stepped in front of a microphone to describe how Facebook was going all in on news. The internal algorithm, which determines which of a zillion posts every day actually show up in your feed, had been reworked over the previous year and a half to deliver more content from publishers. Facebook, in Zuckerberg’s words, was aiming to be the “perfect personalized newspaper for every person in the world.”

This was a big deal. The social network seemed to recognize it was in the media business—the newspaper business!—and that had to be good news for the rest of the media, too.

Facebook CEO Mark Zuckerberg unveils a new look for the social network’s News Feed in 2013.

Jeff Chiu/AP

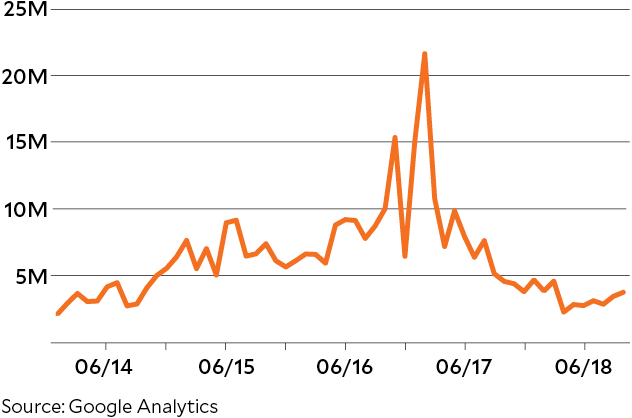

It certainly was for Mother Jones. Before that shift, up to 1.5 million users would land on one of our stories from Facebook each month. By the end of 2014 (thanks to strong reporting, a killer social team, and a lift from the algorithm) that number had jumped to 4 million, then 5 million. We were reaching people who had never gone to our website, never seen our print magazine. And while some would only glance at one article and never return, many others were reading deeply.

They were sharing, too. Lacking a huge brand or marketing budget, MoJo had never been able to get our reporting to all the people who might be interested in it. Now, readers became our marketing team, and each share was a signal to the algorithm to show our stories to even more people.

For us, this was a way to reach more people with investigative reporting. But for many newsrooms—especially those dependent primarily on advertising revenue—the urge to feed Facebook changed the way news was produced. First came clickbait and the rise of entire companies (Upworthy and the early versions of BuzzFeed and HuffPost) built entirely around getting you to click, like, and share. Millennial-focused PolicyMic dropped the “policy” and became a click machine monetizing progressive outrage with what one staff writer called “clickbait Mad Libs” stories. Super-shareable bits that pushed emotional buttons, from warm and fuzzy hope to anger and fear—the “information equivalent of salt and fat,” as Danny Rogers of the Global Disinformation Index puts it—became the ticket to business success.

Then, as part of one of Zuck’s pet projects, Facebook pushed publishers to “pivot to video” and even paid some news organizations to make videos (for Mic, those payments are reported to have been as high as $5 million in a single year), with the predictable result that newsrooms laid off writers en masse and beefed up video teams. Never mind that Facebook, as we wouldn’t learn until much later, was dramatically overselling the number of minutes people actually spent watching videos on the platform. The site’s gravitational force had become so strong that its every move changed the orbits of those around it.

All along the way, as Facebook pumped headlines into your feed, it didn't care whether the "news" was real. It didn't want that responsibility or expense. Instead, it homed in on engagement—did you share or comment, increasing value to advertisers? Truth was optional, if not an actual hindrance.

But as with actual salt and fat, we humans didn’t evolve as rapidly as our information diets did. We were still looking for information when the platforms were giving us engagement. People Googling “Hillary Clinton” in 2016 wanted to know about a candidate. When the top search results led them to a Breitbart piece on Benghazi, which generated a recommendation for an Infowars video, which sucked them into a wormhole of conspiracy chats, they weren’t thinking, “I’m being shown engaging content.” They were reading a perfect personalized newspaper.

This is what Zuckerberg and the other platform chiefs still haven’t grappled with: Their tools are great at helping you find content but not truth. (Even YouTube’s app for kids, as Business Insider discovered, recommended conspiracy videos about our world being ruled by reptile-human hybrids.)

Facebook et al. became the primary sources of news and the primary destroyers of news. And they refused to deal with it because their business is predicated on the fallacy that technology is neutral—Silicon Valley’s version of “guns don’t kill people.”

Having denied and deflected the problem of information warfare when the wolf was at the door, by early 2017 Facebook’s leaders were finally beginning to freak out. They gave speeches about how important quality journalism was. They put money into media literacy. They hired Campbell Brown, an ex-anchor with GOP ties, to run a “news partnerships” initiative.

And then they delivered the sucker punch. In January 2018, Zuckerberg announced what amounted to the end of the “perfect personalized newspaper”: Facebook was pivoting back to friends. The algorithm would ramp up the number of posts from individuals a user was connected with and dial way back on news. Not the fake kind—the real thing.

Today, you are far less likely to see posts from Mother Jones or any other publisher than you were two years ago, even when you’ve specifically followed that page. For many serious publishers, Facebook reach has plummeted—so much so that some are even breaking their rule against disclosing internal analytics. Slate recently revealed that in May 2018, it saw 87 percent fewer Facebook referrals than it had in early 2017. Other news organizations have taken a hit in the same range.

This means that people are getting less news in their feeds, right at a time when news is more important than ever. And because, with the stroke of an algorithm, Facebook erased a huge part of publishers’ audience, it also vaporized much of what was left of the revenue base for journalism. It’s no accident that just a couple of months ago, Verizon revealed that its digital media division—which includes AOL, Yahoo, and Tumblr along with journalism shops like HuffPost and Engadget—was worth about half as much as the nearly $9 billion it had previously been valued at. Layoffs soon followed. RIP the dream of “monetizing audiences at scale.”

Mother Jones Page Views From Facebook

For MoJo, this decline is significant but not catastrophic. Facebook never was the primary driver of what we do, and we have lots of other ways to get our stories to people, from social platforms to newsletters, podcasts, and, you know, print. Even so, the decline in Facebook audience over the past 18 months translates into a loss of at least $600,000—and for other publishers, the hemorrhaging has been far worse. The depressing tale of how Mic rode Facebook all the way up to a fantasy valuation of $100 million, and then all the way back down to a fire sale and the brutal dumping of its entire newsroom, is just one example.

It’s important to be clear that this is not about Facebook—or the other platforms—having actual malice toward news. There are many people inside these companies who recognize the problems they’re creating, and some of them chafe at not being able to talk about them more publicly. There are also many who seriously want to support sound information and have worked hard on initiatives to do that. But at least so far, those efforts haven’t matched the damage done.

Part of the reason is that Facebook has few incentives to prioritize being a responsible player in the information ecosystem. It’s a publicly traded company required to create value for shareholders, not the public. Maximizing engagement, whether it’s with baby photos or hateful memes, helps create that value.

It’s also become clear that Zuckerberg doesn’t fundamentally grasp the difference between journalism and propaganda. Last May, he explained to a roomful of journalists that “a lot of what you all do is have an opinion.” Facebook, he said, is just providing space for many opinions.

It was an almost Trumpian kind of nihilism: There is no truth. There are no facts. There is just “somebody’s version of the truth,” in Rudy Giuliani’s immortal phrasing. Everywhere you look, this truth-agnostic approach pervades the way Facebook and the other platforms have operated.

When Facebook’s vice president of global public policy, Joel Kaplan, showed up conspicuously sitting behind Brett Kavanaugh in the justice’s confirmation hearings, many Facebook staffers took it as a signal that the company was throwing its weight behind someone credibly accused of sexual assault. Facebook leadership insisted Kaplan was there simply in a personal capacity. (“Please don’t insult our intelligence,” one staffer countered.) Kaplan ended up throwing Kavanaugh, his friend from back in the Bush administration, a party upon his ascent to the court.

But aside from providing a cameo of entitlement, the moment also encapsulated how conservatives have managed to keep Facebook constantly seeking to appease them, despite (or rather because of) its perceived liberal bias. It's a classic case of working the refs: Yell about being treated unfairly, and people will strain to prove otherwise. An executive with another social platform told us privately that his company had found an "elegant" solution to suppress the spread of disinformation via its algorithm—but that it wasn't immediately implemented, the person said, because of fear that it would become "a political issue" with censorship charges from the right. Likewise, the Wall Street Journal reported that Kaplan helped kill a project to help people communicate across political differences because it might patronize conservatives, who might not agree with Facebook's definition of "toxic" speech. He also reportedly pushed for the Daily Caller, an unadulterated propaganda shop co-founded by Tucker Carlson, to be part of Facebook's fact-checking program.

Supreme Court nominee Brett Kavanaugh arrives to testify before the Senate Judiciary Committee on September 27. Seated second row, second from left, is Joel Kaplan, Facebook’s vice president for global public policy.

Win McNamee/Pool Photo/AP

As it happens, conservative entities like the Daily Caller are thriving in the everything-is-opinion world Facebook created. Not long after the midterm election, we checked in on the CrowdTangle tool, which lets you see high-performing posts on Facebook. Of the top 50 stories from US publishers, 15 were screeds from the Daily Caller, Fox News, the Daily Wire, and Breitbart. All other US newspapers, magazines, and broadcast and cable networks combined accounted for another 16. The rest were clickbait from the likes of LadBible and TMZ.

Our sample happened to be from the day after a photo of a mother and her young daughters fleeing tear gas at the US-Mexico border went viral. Among the top-performing posts on Facebook was a Daily Wire piece suggesting, with zero evidence, that the photo had been staged. It had accumulated nearly 55,000 likes, comments, and shares. A BuzzFeed interview with the actual family (revealing that they were not staging their terror) had fewer than 7,000.

This pattern holds true day after day—and it’s worse when you zero in on politics. Of the top 20 political news posts on any given day, more typically come from conservative outlets than from mainstream ones, with progressive voices barely breaking through at all. (Hat tip to the New York Times’ Kevin Roose, who has been keeping tabs on this for some time.) And the algorithm tends to choose the most inflammatory and reckless outlets and posts—you’re probably not going to find a thoughtful column by George Will or trenchant analysis from the American Conservative here.

So right-wing sites and clickbait dominate the platform that dominates American news consumption. And that same platform, despite its stated commitment to supporting “quality news,” keeps making it harder for people to find genuine journalism.

It’s tough to overstate how serious this is—and how much it differs from the conventional wisdom that Americans are just becoming “polarized” into left and right. The polarization evident in social-media news consumption is not between left and right. It’s between real news and propaganda (which does come in both conservative and liberal flavors, though the likes of Occupy Democrats are bit players compared with Fox News).

Facebook’s leaders are right about one thing, a talking point they’ve used relentlessly in recent months with regard to the disinformation crisis: “These aren’t problems you fix; they are problems you manage.” There are no easy solutions, as Zuckerberg acknowledged in a recent post about Facebook’s work on artificial intelligence to suppress “harmful content” like images of unclothed breasts or terrorist messaging. The AI is getting better at ferreting out other kinds of bad stuff—like hate speech—too, he noted, but it flags this only about 52 percent of the time, compared with 96 percent for nudity. Say that number gets all the way to 60 or even 70 percent—is it enough?

Of course not. Even if Facebook becomes better at weeding out, say, voter suppression or racist hate, history does not suggest it will deploy this capability in ways that could hurt its bottom line. It won’t make itself less dominant in the way people access information about the world or more cautious about using the data it has on us. And, for that matter, we shouldn’t let it solve these problems for us. We shouldn’t expect it to be the arbiter of how much news we see in a day or how much distortion is okay.

We need to take control of our information environment before it takes control of us. That requires government to do its job—regulation, antitrust action, the full array of tools that democracies have used in the past to rein in the power of corporations. It also requires the rest of us, including those who produce news, to step up. We need to build business models strong enough to support journalism regardless of the whims of an algorithm, and to stiffen our spines when the targets of our reporting scream “bias” or “fake news.” We need the resolve to go after important stories before they go viral, and to resist he-said-she-said evasions. We need the confidence and money to fuel the work.

And you, who are reading this right now, can use the power of your dollars and time to build reporting at MoJo and elsewhere that strengthens democracy, and to withdraw support (and eyeballs) from platforms or content that don’t. These are “problems you manage.” It’s time to stop letting Facebook manage them for us.