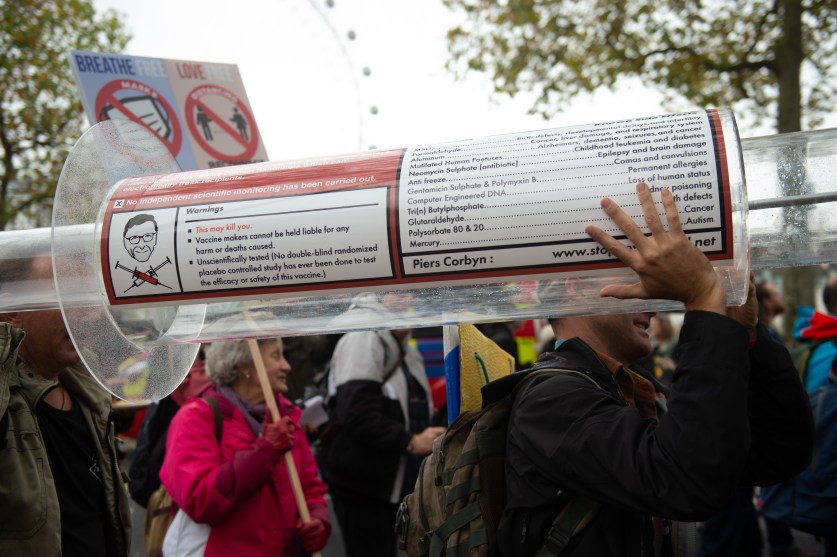

Anti-lockdown conspiracy theorists rally in London. Justin Ng/Avalon/ZUMA

This story was originally published by the Bulletin of the Atomic Scientists and is reproduced here as part of the Climate Desk collaboration.

The COVID-19 vaccine will not make you go blind or cause infertility. It does not contain a microchip or fetal tissue. It is not a Bill Gates scheme to get richer quick.

Those are some of the rumors that have spread like wildfire on social media in recent months. According to a report from the nonprofit coalition First Draft, which analyzed more than 14 million social-media posts mentioning vaccines or vaccination during a three-month period last year, two vaccine narratives have emerged: One narrative emphasizes safety concerns, and the other focuses on mistrust of the people and institutions involved in vaccine development.

Facebook and its subsidiary Instagram “drive vaccine discourse on social media,” including conspiracy-related content, First Draft reported. Facebook has taken some recent actions to try to stop the spread of vaccine misinformation on its platforms. But some independent researchers have decided to take matters into their own hands and are testing ways for “infodemiologists” to intervene directly on Facebook.

Last April, Facebook reported that it was working with more than 60 fact-checking organizations to detect false claims, hide some groups and pages from Facebook’s search function, and add warning labels with more context. In December, the company banned some COVID-19 vaccine-related content, such as allegations that the vaccine contains fetal tissue or bears the Antichrist’s “mark of the beast.”

This month, Facebook announced that it has consulted with leading health organizations and is expanding its efforts to remove false claims on Facebook and Instagram about COVID-19, vaccines for the disease, and vaccines in general. Facebook’s list of debunked claims now includes the notions that it is safer to get COVID-19 than to get the vaccine, and that vaccines cause autism.

“The goal of this policy is to combat misinformation about vaccinations and diseases, which if believed could result in reduced vaccinations and harm public health and safety,” Facebook said.

Accounts that repeatedly share debunked claims may be suspended by Facebook. The company recently banned Robert F. Kennedy Jr. from Instagram, where he had more than 800,000 followers, for repeatedly sharing misinformation about vaccine safety and COVID-19. He is not banned from Facebook, though.

Outside intervention

Will Facebook’s actions slow the spread of vaccine misinformation? Some experts question whether the social media giant is doing enough. Others point out that Facebook’s efforts have backfired at times. For example, Facebook has blocked some pro-vaccine ads, including from public health agencies, unintentionally identifying them as “political” messages.

The First Draft analysis of social-media posts about vaccination focused mostly on unverified Facebook pages—those that Facebook has not authenticated as belonging to legitimate businesses or public figures. However, the most popular posts on Facebook “tend to be dominated by established news sources,” the report noted.

On those verified news pages, a nonprofit organization unconnected to Facebook has begun testing online interventions to combat vaccine misinformation. The organization, Critica, has partnered with Weill Cornell Medicine and the Annenberg Public Policy Center of the University of Pennsylvania on a pilot program, funded by the Robert Wood Johnson Foundation, that is deploying “infodemiologists” to respond directly when misinformation about vaccines is posted by Facebook users commenting on news articles.

“Infodemiologists” are health experts who fight false information in a similar way to how epidemiologists with the CDC’s Epidemic Intelligence Service fight epidemics. They serve on the front lines, springing into action when they detect an outbreak that threatens public health.

Critica is hiring graduate students and post-doctoral fellows with science backgrounds and training them in communication techniques they will use to respond in real time to misinformation, within the cultural frames of the communities where the misinformation arises, says David Scales, a sociologist and physician at Weill Cornell Medicine/New York-Presbyterian Hospital who serves as chief medical officer for Critica. When they intervene on Facebook, these part-time infodemiologists will identify themselves as part of a research project and provide a link to the Critica study.

For example, suppose a news organization posts an article on Facebook discussing whether pregnant women should be vaccinated against COVID-19, and a commenter responds: “I can’t imagine giving the vaccine to animals, let alone people.” An infodemiologist might then jump into the conversation to acknowledge the commenter’s concern and ask questions to better understand it: “I’ve heard from a lot of people hesitant about getting the vaccine. Is there anything that has you worried? Would anything make you more likely to get it?”

Scales says the goal isn’t to get into a debate or to change the mind of the commenter, particularly if it is someone who holds strongly anti-vaccine views.

What the researchers are aiming for is a respectful, non-belittling interaction in which “the bystanders, the people who are on the fence and are watching that conversation, will start to see reputable information, or at least see the misinformation debunked in a way that they might be able to perceive it.” When someone in the conversation makes incorrect or misleading statements, the infodemiologist will respond with scientific facts to ensure that bystanders are not left misinformed.

The study has just begun, and Critica is still developing the protocols and training manuals for it. “We are drawing from a wide range of literature on things that have been shown to be useful in discussions with people who have vaccine hesitancy or resistance, or even flat-out anti-vaccine views, as well as literature on the best ways to rebut and address misinformation,” Scales says.

For now, the researchers are focusing on Facebook “to really try to solidify the model before we start taking it to other platforms,” Scales says. But eventually they plan to expand to other platforms where misinformation is also rampant.

A threat to health

False rumors about COVID-19 vaccines may seem harmless or even humorous. Twitter users have had fun with the microchip rumor, for example.

But when false information becomes rampant, as in today’s “infodemic,” it becomes difficult for the general public to sort fact from fiction. Eventually, that can lead to a breakdown in public trust.

The COVID-19 vaccine, for example, will be most effective if most people get vaccinated. If false claims make large numbers of people reluctant to get vaccinated, that impairs the entire population’s ability to recover from the pandemic, including people who have agreed to be vaccinated.

Critica hopes its interventions will encourage people to make vaccine decisions based on scientific evidence, but you don’t have to be an infodemiologist to help stop the spread of potentially harmful vaccine misinformation on social media. The World Health Organization has posted quick links that help users report false or inappropriate content on nine of the most popular social media platforms.

Want to mark a Facebook vaccine rumor about Bill Gates as false news, for example? Here’s how. If you don’t mind having a microchip at your fingertips, that is.