Pavlo Gonchar/SOPA Images via ZUMA

Last week, Facebook announced that it would stop giving special treatment to politicians. The policy change was greeted as big news. The Verge, which broke the story last Thursday, explained that Facebook will “end its controversial policy that mostly shields politicians from the content moderation rules that apply to other users.” Later that evening, the Washington Post called the changes a “major reversal.”

Upon closer inspection, however, there’s no assurance that the shift will have much of an impact on what politicians can post or how they are treated. Facebook allowed politicians to lie, bully, or otherwise break its code of conduct in the past, and nothing in the new announcement suggests it will stop them from doing so in the future.

“They have this very peculiar practice of making very large policy announcements that amount to very little,” says Tim Karr, a senior director at Free Press, a digital rights group that has been critical of Facebook’s content moderation practices. The policy change “basically gives them the ability to go back to their usual practices, which is to give special accommodations to the speech of politicians, even when it clearly violates their Community Standards.”

Last week’s announcement came in response to feedback from the Facebook Oversight Board, a quasi-independent body funded by Facebook that was set up last year to make specific content moderation decisions for the company. The board took on its most prominent case earlier this year, involving Donald Trump. In the wake of the January 6 Capitol insurrection, Facebook suspended Trump from posting on Facebook and Instagram indefinitely, then asked its new Oversight Board if this was the right call. The company also asked the board how to handle suspending politicians more broadly. Last month, the board kicked the Trump decision back to Facebook, insisting “that Facebook apply and justify a defined penalty.”

On Friday, Facebook announced that decision: Trump’s suspension would last two years, at which point the company would assess the safety of Trump’s return to the platform. If he returns, he will be subject to more severe penalties—including more suspensions—for violating the company’s policies, according to new rules for restricting the accounts of public figures during civil unrest, created in response to the board’s ruling. The new guidelines establish punishments for dangerous politicians around the world—if Facebook chooses to enforce them.

Facebook also responded to a list of recommendations from the Oversight Board. The change that made the biggest splash was on the issue of “newsworthiness,” a term Facebook has used to exempt politicians from rules that govern regular users of the platform. Facebook created the exemption in 2016, after Facebook moderators removed the Pulitzer Prize–winning Vietnam War photograph known as “Napalm Girl” posted by Norway’s largest newspaper because it violated the company’s rule against nudity. In response to criticism, Facebook restored the image and announced an exception for “newsworthy” material.

But the Washington Post reported last year that the newsworthiness exemption had actually come about as an off-the-books practice in 2015 in response to Trump’s call for a ban on all Muslims entering the United States, suggesting that it has always been inextricably linked to Trump’s dangerous behavior on the platform. Even though Trump is off it for now, the allowances for politicians have not fully gone out with him.

In 2019, Facebook announced that all politicians would be presumed “newsworthy,” meaning their posts could remain up even if they violated Facebook’s rules. “From now on we will treat speech from politicians as newsworthy content that should, as a general rule, be seen and heard,” Nick Clegg, vice president for global affairs, announced that September. This meant that content that violated Facebook’s rules would not be removed unless the risk of violence outweighed the benefit of leaving it up for people to see. Clegg also explained that politicians’ posts and paid ads were not subject to the company’s independent fact-checking process, an exemption he said had been official since 2018. Put plainly, politicians could lie on Facebook.

So it seemed a major about-face when, on Friday, Facebook announced that posts from politicians would no longer automatically be considered newsworthy. But the proof of any real change is in the details. Facebook very rarely officially applies this exemption. It has acknowledged applying it to Trump only once, when the company decided to leave up a video of a Trump rally in which the then-president called out an attendee as overweight, violating the company’s policy against bullying.

“We grant our newsworthiness allowance to a small number of posts on our platform,” Facebook wrote Friday in a response to the Oversight Board. The company went on to detail what it will do differently now. “When we assess content for newsworthiness, we will not treat content posted by politicians any differently from content posted by anyone else,” it stated. “Instead, we will simply apply our newsworthiness balancing test in the same way to all content, measuring whether the public interest value of the content outweighs the potential risk of harm by leaving it up.”

In other words, the exemption may still be implemented for politicians at the company’s discretion. And since it was officially used so infrequently, it’s unlikely that it will affect Facebook’s policy enforcement toward politicians outside very rare situations. Facebook says it will begin reporting when it uses the exemption next year, a shift that may provide transparency around the policy but does not promise any substantial changes in how politicians are treated. This is “writing rules that basically give them an exit to actually having to enforce those rules,” says Karr.

Facebook’s creation of the Oversight Board is understood as an attempt to prove that self-regulation is a viable alternative to increased government regulation. Pushing that narrative, Facebook says that this change, and others announced last week, are a sign of the Oversight Board’s ability to bring big change to the company. “The Oversight Board’s decision is accountability in action,” Clegg wrote in announcing some of the changes. “It is a significant check on Facebook’s power, and an authoritative way of publicly holding the company to account for its decisions.” He added, “As today’s announcements demonstrate, we take its recommendations seriously and they can have a significant impact on the composition and enforcement of Facebook’s policies.”

But at least in the immediate term, the imbalance between what is allowed for politicians and what is allowed for regular users remains stark. As one example, Shireen Mitchell, who founded Stop Online Violence Against Women, a nonprofit that tracks online harassment, points to the ongoing problem of people of color having posts taken down or being suspended from Facebook for using the term “white people.” Mitchell says her organization still gets reports of these takedowns. At the same time, she adds, Trump has been allowed to attack Black people, including with his threat last May during protests over George Floyd’s murder that “when the looting starts, the shooting starts.” The phrase is generally attributed to a bigoted Miami police chief who used it in 1967 to threaten civil rights protesters.

Officially, the newsworthiness exception was not invoked in this case, showing the latitude the company gives to politicians without explicitly applying a newsworthiness test, and the scant difference the elimination of that test will make. In this case, Zuckerberg had personally determined that the post didn’t violate Facebook policy.

“That is a threat, that’s incitement to violence to Black people, period,” Mitchell says. “That is a historical threat for any Black people at any time in our history if we decide to protest the government, which we have the right to do.” The post remains up today.

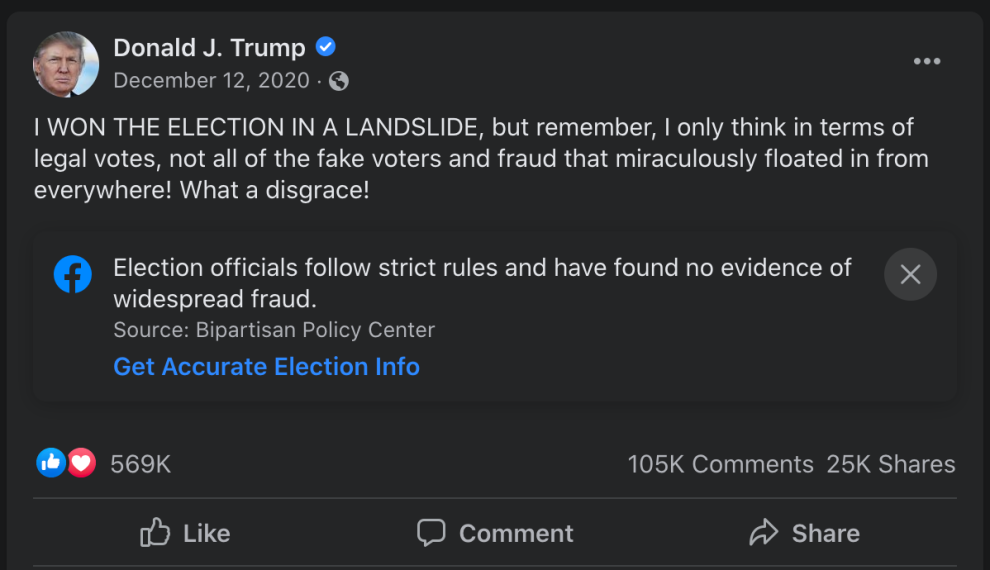

Perhaps just as importantly, Facebook has stuck with its decision not to fact-check posts or ads by politicians. Though officially not part of the newsworthiness policy, this exemption played an important role in Trump’s ability to foment a violent insurrection on January 6. In the year preceding the attack, Trump posted 177 times about a stolen or fraudulent election, and those posts garnered a total of 74.4 million interactions on the platform, according to a report by the left-leaning watchdog Media Matters. After the election, Facebook began affixing fact-checking labels to his claims of a stolen election, but the posts remained up, and they are still up today. Internal data showed that the labels did little to stop the spread of Trump’s popular posts. Instead, the lies helped build the narrative that fueled the January 6 attack.

Trump also posted frequent misinformation about COVID-19 on Facebook. This spring, the global nonprofit activist group Avaaz found that Facebook’s policy against fact-checking politicians’ ads contributed to misinformation in the Georgia Senate race, allowing content that had been debunked by Facebook’s fact-checkers to be spread to millions of Georgia voters. None of this ever reached the level of an official newsworthiness exemption, but it’s a clear sign of the incredible power that politicians wield on the platform. If Facebook wanted to mitigate the harm that politicians like Trump can cause, it would more directly target the ability to lie in ads and posts.

Zuckerberg has repeatedly said that his company should not be the “arbiter of truth” or referee political debates. Karr sees the issue of fact-checking as one of Facebook’s most difficult problems. “I think Facebook is afraid of fact-checking because it knows that to do so would be to open Pandora’s Box in a way that is really unmanageable,” he says, noting that the volume of content from politicians alone may be impossible to keep up with. “So it has run from that responsibility.”

If it’s an intractable problem, it’s one Facebook created. Now, instead of finding a solution, the company simply claims it has made big changes. The proof, however, is not in the new policy but in what comes next.