Jose Hernandez and Beatriz Gonzalez, stepfather and mother of Nohemi Gonzalez, who died in a terrorist attack in Paris in 2015, arrive to speak to the press outside of the US Supreme Court following oral arguments in Gonzalez v. Google. Drew Angerer/Getty Images

Thanks to the 26-word legal provision known as Section 230 of the Communications Decency Act, social media companies have long enjoyed broad immunity from lawsuits over what individual users post on their platforms. On Thursday, the Supreme Court left that liability shield intact, sidestepping a contentious legal and political fight that at one point seemed likely to result in one of this term’s most important rulings.

Section 230 was written in 1996, decades before you could watch an Instagram reel, text your friends Memojis, or be two clicks and two hours away from having paper towels delivered to your doorstep. The legislation’s drafters intended the statute to protect internet platforms (at the time, mostly rudimentary chat forums) from being held legally responsible for what users post online, even when the platforms failed to moderate out all bad content.

But in the modern internet age, the statute has far greater implications: Section 230, for example, has protected TikTok from being held civilly responsible for user-generated videos encouraging kids to choke themselves until they black out. Several kids who allegedly participated in the challenge died. The statute, according to Bloomberg, has also been used to dismiss a lawsuit blaming Snapchat for connecting children to drug dealers who provided painkillers that turned out to contain fatal doses of fentanyl.

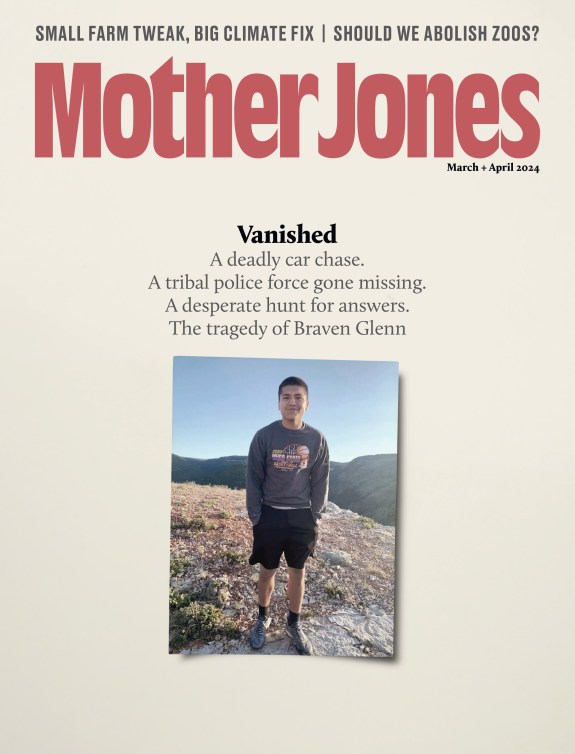

In February, the Supreme Court for the first time considered the scope of Section 230’s broad legal protections. The case focused on the death of Nohemi Gonzalez, an American student who was killed in a Paris terrorist attack in 2015. Gonzalez’s family argued that YouTube, a Google subsidiary, indoctrinated ISIS members by using the tech giant’s proprietary algorithm to feed would-be terrorists endless propaganda videos posted by individual users. Those algorithmic recommendations, the family contended, constituted YouTube’s own speech and thus should not be protected by Section 230.

Google, in turn, argued that any new legal interpretation limiting Section 230’s reach would cause social media companies to either over-moderate their platforms or not moderate them at all—harming internet innovation, user experience, and even the larger economy.

“Undercutting Section 230 would lead to businesses and websites being unable to operate and to more lawsuits that would hurt publishers, creators, and small businesses,” Halimah DeLaine Prado, a lawyer for Google, wrote in a blog post before the oral arguments. “That rising tide of litigation would reduce the flow of high-quality information on the internet, which has created millions of American jobs, innovative new ideas, and trillions in economic growth.”

During nearly three hours of oral arguments in February, the Supreme Court justices seemed to struggle to grasp how they could draw a line that protects people from the harms of social media and tech companies’ algorithms without undermining the usefulness of the platforms. “From what I understand…algorithm suggests [what] the user is interested in,” Justice Clarence Thomas said at one point. “Say you get interested in rice pilaf from Uzbekistan. You don’t want pilaf from some other place, say, Louisiana.”

“We’re a court. We really don’t know about these things,” Justice Elena Kagan added. “These are not like the nine greatest experts on the internet.”

It’s little surprise, then, that the justices chose not to rule on Section 230 at all. Instead, they issued a unanimous opinion in a related case involving Twitter and terrorism, ruling that the plaintiff had failed to show that Twitter had “aided and abetted” terrorism by not blocking certain content that validated terrorism. Because the Gonzalez family’s argument that Google aided and abetted terrorism through its algorithm was “materially identical” to the allegation in the Twitter case, the court, in a brief, unsigned opinion, sent the case back down to a lower court and declined to even address the controversies surrounding Section 230.

For now, it seems, internet platforms can keep hosting and recommending content about pilaf—and terrorism—without worrying much about losing lawsuits.